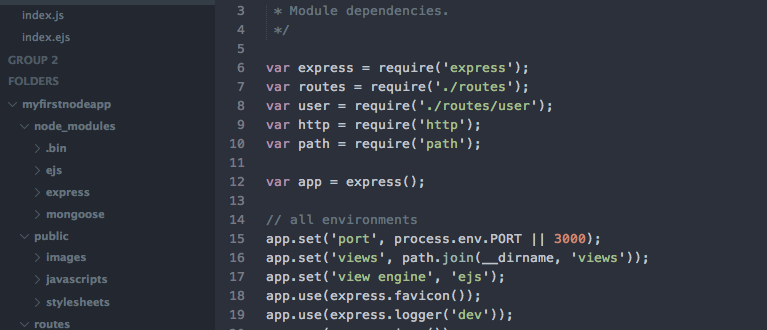

You’re no stranger to locally hosted PHP, especially with MAMP or XAMPP. Here is how to get started locally with Node.js.

Setting up your first Node.js app locally on OS X is so easy it makes my eyes tingle. I have always developed on a Mac, so if you are interested in Windows, it’s probably best to find someone who specializes in that. If this doesn’t concern you, read on!
